You may have noticed that the US had an election this week. If you have any interest in statistics, you may also have noticed an election blogger at the NY Times called Nate Silver.

The Economist summarises the story:

The 2012 presidential election went exactly as predicted by the leading quantitative analysts. Nate Silver of the New York Times’s FiveThirtyEight blog, Sam Wang of the Princeton Election Consortium and Drew Linzer of Votamatic all got at least 49 states right.

…

Mr Silver, who has taken the brunt of the backlash over statistical methods in this campaign, has now been vindicated as the finest soothsayer this side of Nostradamus, and is enjoying a nice sales bump for his new book on the art of prediction.

Gawker adds on some commentary:

But… how does he do it?

Using… math. Silver’s “secret” is a proprietary statistical model of presidential elections — basically, a numerical “election simulator” based in part on past elections. He runs the model thousands of times every day, using the latest numbers — polling figures and economic data, mostly — and watching who wins more often, and how frequently. The model’s results are converted into percentages: when Silver wrote that Obama had a 90.9 percent “chance of winning,” he means that Obama won 90.9 percent of the times that Silver ran the model with the most recent data. It’s not quite the same thing as a prediction — Silver’s model could be “correct,” and make the right assumptions, and Romney could still win — but it’s close enough.
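For the curious, the "run it thousands of times and count the wins" idea can be sketched in a few lines of Python. This is only a toy illustration of the general Monte Carlo approach described above, not Silver's proprietary model. The state groupings, poll margins and error sizes (`STATES`, `national_sd`, `state_sd`) are all made-up assumptions.

```python
import random

# Hypothetical inputs: name -> (electoral votes, poll margin for candidate A).
# These are invented illustrative numbers, not real 2012 polling data.
STATES = {
    "Safe A": (200, 12.0),
    "Safe B": (190, -12.0),
    "Lean A": (60, 3.0),
    "Toss-up 1": (50, 0.5),
    "Toss-up 2": (38, -1.0),
}
VOTES_TO_WIN = 270  # a majority of the 538 electoral votes


def simulate_once(rng, national_sd=2.0, state_sd=3.0):
    """Run one simulated election and return candidate A's electoral votes."""
    # One polling error shared by every state, plus an independent error per state.
    national_shift = rng.gauss(0.0, national_sd)
    total = 0
    for votes, margin in STATES.values():
        simulated_margin = margin + national_shift + rng.gauss(0.0, state_sd)
        if simulated_margin > 0:
            total += votes
    return total


def win_probability(runs=10_000, seed=538):
    """Share of simulated elections in which candidate A reaches 270 votes."""
    rng = random.Random(seed)
    wins = sum(simulate_once(rng) >= VOTES_TO_WIN for _ in range(runs))
    return wins / runs


if __name__ == "__main__":
    print(f"Candidate A wins {win_probability():.1%} of simulated elections")
```

The shared `national_shift` is the design choice that matters most here: if the polls are wrong, they tend to be wrong in the same direction everywhere, so treating every state as independent would make the favourite look safer than they really are.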

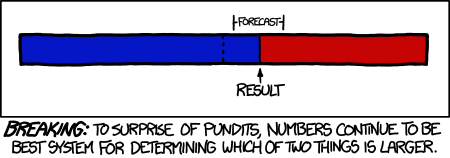

I love that the US media has discovered the usefulness of statistics and mathematical models, and how much better they are than gut feel, even gut feel from people with decades in the business. (Perhaps one day they will extend their discoveries to climate change, but that’s another story.)

But I feel this moment deserves some recognition of our own, Australian, election geeks. Not only do they seem to have been doing it longer, they have far more recognition from commentators on both sides of politics.

Antony Green

I have had an actuary crush on Antony Green since he called the 1991 NSW election for the ABC. In those days, he didn’t get to sit at the main table, but instead sat at a subsidiary table with his computer, and was occasionally called for some numbers commentary. These days, he’s sitting at the top table, and when he calls a seat for either side, both sides of politics almost immediately accept the verdict.

“In 1993 the early figures looked bad for Keating when you just looked at the raw numbers but our computer was saying, no, Keating’s back.”

This would not be the last time Green found himself – and of course the entire coverage team – out on a limb on live TV, and not the last time that the clinical crunching of numbers eventually saw him proved right.

“There were members of the ABC board watching the coverage from Sydney who were very concerned that we were saying that the Keating government would be re-elected. There were phone calls being made to Canberra saying the computer is wrong, stop using it – pull the plug on this guy.

“But the computer was right, the model was right.”

Antony Green has been integrating statistics and politics in Australia for 20 years. And he’s had the respect of the commentariat for nearly that long.

Possum Comitatus

Compared with Green, Possum Comitatus is a relative newbie, more like Nate Silver. He started out as a member of a group political blog, specialising in analysing poll data. After doing it for a while on his own, and outing himself as Scott Steel from Brisbane, he became part of the Crikey stable of bloggers. He doesn’t just do polls; he does all sorts of data-driven analysis, and occasionally rips apart news commentators with a shaky understanding of arithmetic.

Here’s a typical post, where he analyses the relationship between net satisfaction with the prime minister and the two-party-preferred vote:

The dynamic between the PMs satisfaction and the government’s vote during the Rudd/Gillard era is utterly dominant. But wait! [nerd time!] It’s so dominant, that even that incredible explanatory power of 89% very likely underestimates the true strength of the relationship because of sampling error and rounding errors in the polls that we’re using for analysis.
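The claim that noisy polls understate the strength of a relationship can be checked with a small simulation. The sketch below is my own illustration, not Possum's analysis: it invents a "true" satisfaction series that drives the vote almost exactly, then adds sampling error and rounding to both, and compares the explanatory power (R²) before and after. Every number in it (the 0.15 slope, the noise sizes, the number of polls) is an assumption chosen only for demonstration.

```python
import random


def r_squared(xs, ys):
    """Squared Pearson correlation, i.e. R^2 of a one-variable linear fit."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)


def attenuation_demo(n_polls=2000, poll_noise_sd=1.5, seed=1):
    """Return (R^2 on the true values, R^2 on the values as polled)."""
    rng = random.Random(seed)
    # Hypothetical "true" values: net satisfaction drives the vote closely.
    true_sat = [rng.uniform(-40, 20) for _ in range(n_polls)]
    true_vote = [50 + 0.15 * s + rng.gauss(0, 0.5) for s in true_sat]
    # What a poll reports: truth plus sampling error, rounded to whole points.
    seen_sat = [round(s + rng.gauss(0, poll_noise_sd)) for s in true_sat]
    seen_vote = [round(v + rng.gauss(0, poll_noise_sd)) for v in true_vote]
    return r_squared(true_sat, true_vote), r_squared(seen_sat, seen_vote)


if __name__ == "__main__":
    true_r2, seen_r2 = attenuation_demo()
    print(f"R^2 on true values:   {true_r2:.2f}")
    print(f"R^2 on polled values: {seen_r2:.2f}")
```

Under these assumptions the polled R² comes out lower than the true one, which is the direction of the effect the quote describes: measurement noise blurs a relationship rather than inventing one.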

…

“But correlation isn’t causation” I hear you say! And you’d be right – so let’s go a step further and look not just at correlation, but peer as best we can into causation.

One of the tests we have available is Granger Causality – which tests whether one time series can predict future values of another time series. It’s not a measure of perfect causality – for no such thing generically exists – but it’s a measure of a practical causality.
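To make that concrete, here is a minimal sketch of the idea behind a Granger test, written from scratch in plain Python. It is not the code behind the post quoted above, and the data is simulated: `x` is random noise and `y` is built to follow last period's `x`. The test fits two regressions for `y` (one using only its own past, one that also uses the past of `x`) and asks, via an F-statistic, whether adding `x` shrinks the prediction errors by more than chance would. The function names and the single-lag default are my assumptions.

```python
import random


def ols_rss(rows, targets):
    """Residual sum of squares from a least-squares fit of targets on rows."""
    k = len(rows[0])
    # Augmented normal equations [X'X | X'y].
    a = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * t for r, t in zip(rows, targets))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(a[row][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for row in range(k):
            if row != col:
                factor = a[row][col] / a[col][col]
                a[row] = [v - factor * p for v, p in zip(a[row], a[col])]
    beta = [a[i][k] / a[i][i] for i in range(k)]
    return sum((t - sum(b * v for b, v in zip(beta, r))) ** 2
               for r, t in zip(rows, targets))


def granger_f(x, y, lag=1):
    """F-statistic for 'past x helps predict y beyond y's own past'."""
    times = range(lag, len(y))
    targets = [y[t] for t in times]
    # Restricted model: constant plus y's own lags.
    restricted = [[1.0] + [y[t - i] for i in range(1, lag + 1)] for t in times]
    # Unrestricted model: the same, plus lags of x.
    unrestricted = [row + [x[t - i] for i in range(1, lag + 1)]
                    for row, t in zip(restricted, times)]
    rss_r = ols_rss(restricted, targets)
    rss_u = ols_rss(unrestricted, targets)
    dof = len(targets) - (2 * lag + 1)
    return ((rss_r - rss_u) / lag) / (rss_u / dof)


def demo(n=300, seed=7):
    """Simulated data in which x leads y by one period, but not the reverse."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]
    y = [0.0] + [0.8 * x[t - 1] + rng.gauss(0, 0.5) for t in range(1, n)]
    return granger_f(x, y), granger_f(y, x)


if __name__ == "__main__":
    f_xy, f_yx = demo()
    print(f"x -> y: F = {f_xy:.1f}")
    print(f"y -> x: F = {f_yx:.1f}")
```

With one lag and a few hundred observations, an F-statistic above roughly 3.9 would be significant at the 5% level. In this simulated example the "x leads y" direction should clear that bar easily, while the reverse direction generally should not. For real work, a tested library routine such as `grangercausalitytests` in statsmodels is a better choice than a hand-rolled solver.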

Poll Bludger

Poll Bludger is another who analyses polls. The blog (also now part of the Crikey stable) is written by William Bowe, from WA. He sticks more to just the stats than Possum Pollytics, but analyses individual seats and pollsters in more detail:

Another feature of the [recent ACT] election to be noted was the poor performance of the only published opinion poll, conducted by Patterson Market Research and published in the Canberra Times during the last week of the campaign. Patterson has a creditable track record with its large-sample polling, despite lacking the match fitness of outfits like Newspoll and Nielsen. On this occasion however the poll was by orders of magnitude in every direction, overstating Labor and the Greens at the expense of the Liberals and “others”.

Raw material and credibility

Australia’s election geeks have a lot less polling data to work with than their US counterparts. But they have a wonderful source of actual election data in the Australian Electoral Commission, which is one of the federal government’s best websites for anyone who loves playing with numbers. And our analysts, by being extraordinarily good at what they do, have the respect of all sides of politics, and even the more partisan parts of the media. I salute them.